Spring 2012 - Vol.7 - No.1

The Evolving Role of Radiation-Dose Considerations in Diagnostic Imaging

Anthony D. Montagnese, M.S., DABR

Certified Medical Physicist

Lancaster General Health

DISCOVERY AND PROMISE

When Wilhelm Conrad Roentgen delivered his seminal paper on the production of x-rays in 1895, even he could not have envisioned the potential of his discovery for the field of medicine. Yet, within mere months of his address, his “invisible rays” were being used to localize foreign bodies in surgical procedures.1 Within only a few years, at least one Crookes x-ray tube for imaging had been installed in most important medical facilities.

As more and more physicists and physicians explored the promise of this new technology, an apparent dark side was also revealed: these “x rays” were capable of causing biological damage. Without regulatory restraint or historical perspective, scientists had been exposing patients, volunteer subjects, and even each other to relentless experiments with unshielded beams of radiation. Within five years of Roentgen’s speech, cases of skin erythema, eye damage, and epilation were regularly being reported among researchers.2 This began a long period wherein growth in the field of Radiology outpaced that of Health Physics and Radiation Safety.

The imaging aspect of this new medical science continued to develop and expand throughout the early part of the 20th century with such additions as radiographic film to replace glass plates and real-time imaging with fluoroscopy; however, understanding of the benefit-to-risk ratio of x-ray imaging remained skewed by the lack of empirical evidence of late-term (i.e., stochastic) effects of irradiation. Though acute, short-term (non-stochastic) effects continued to be observed, they were considered temporary and a small price to pay for the diagnostic information obtained. In fact, scientists “calibrated” early x-ray tubes by determining how long it took to develop skin erythema post-exposure!3

The detonation of atomic bombs in Hiroshima and Nagasaki in 1945 awakened a new sense of alarm regarding the potentially harmful characteristics of ionizing radiation. In the late 1940s, the Atomic Energy Commission (AEC), precursor of today’s Nuclear Regulatory Commission (NRC), was formed to regulate radioactive material and to evaluate its effects on health. Much of its work and subsequent regulation, however, focused on limiting the radiation dose to individuals who are exposed in the course of their occupation, not as patients. Then, as now, these agencies purposely chose not to impose limitations on the radiation dose to individuals from diagnostic imaging procedures or therapeutic radiation, insisting that those matters were solely the purview of the physician. To date, neither single-exam nor patient-cumulative radiation dose limits for diagnostic imaging are set by regulatory agencies. The sole exception is screening mammography, for which federal regulation limits the average glandular dose, as measured on a standard breast phantom, to 3 milligray per view (about 6 milligray for a two-view screening mammogram). (The gray, abbreviated Gy, is the international unit of absorbed dose of ionizing radiation.)

Recent Developments

Throughout much of the 20th century, there was a steady but gradual increase in diagnostic imaging procedures, facilities, and technology. The equipment became more affordable and reliable even as Radiologists and other specialists discovered new uses for it. Nonetheless, the standard film radiograph remained the primary application of radiologic imaging throughout the century.

In the early 1970s, British engineer Godfrey Hounsfield introduced the first application of “digital” imaging with his Computer-Assisted Tomography (CAT) scanner. The first such scanners utilized fan beams of radiation and rows of opposing detectors to digitally reconstruct a two-dimensional cross-section image of the patient, but they found only limited application because of the limited heat capacity of the x-ray tubes and slow computer processing speeds (e.g., one “slice” image usually took 3 to 4 minutes to reconstruct in early scanners). Still, the potential of this instrument was apparent, and CT scanners quickly evolved as computers became increasingly powerful and fast, eventually adding the ability to spiral through a patient volume with incredible speed.

As CT scanning became a faster, more complete imaging modality, the standard radiograph was also undergoing significant change. In the 1990s, the detector of choice moved from film to digital receptors, which not only saved money on supplies and physical storage (film, darkroom supplies) but also added the capability of storing and sharing image files electronically. Picture Archiving and Communication Systems (PACS) were born, eliminating the need for copying and transporting x-ray films to outlying medical offices.

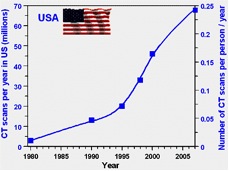

All these relatively recent advances in imaging technology and affordability made the prescription of diagnostic imaging exams by physicians more attractive than ever. As a result, the number of imaging procedures performed in the United States has skyrocketed since the early 1980s. The greatest increase has been in the number of CT exams performed, which has increased exponentially (Figure 1).

Figure 1: Increase in CT scans per year since 1980.4

Understanding Radiation Dose and Effects

While advances in digital imaging and computer power have driven a rapid increase in the number of exams, there has been no parallel increase in the education of physicians about the radiation doses involved. It is important to understand that there are now two non-film ways to do standard x-rays: “computed radiography” or “CR”, and “digital radiography” or “DR.”

In CR, the film/screen cassette is replaced with a cassette that contains a detector. As in conventional radiography, the cassette is placed behind the patient and the exposure is made. The cassette is then processed through a machine that “reads” the screen inside the cassette and creates the image through computer processing. This is a popular solution for facilities that have older x-ray machines, as they can simply substitute this newer cassette for the old film/screen cassette.

Digital radiography (DR), on the other hand, completely eliminates the need for a cassette, as the digital detector is built directly into the x-ray machine. After an exposure is made, the information is delivered directly to the computer without intervening steps. Contrary to common assumptions, computed radiography (CR) delivers a higher radiation dose than the old film/screen radiographs it replaces, because the digital detector cassette requires a higher dose to create an image. DR, in contrast, delivers a radiation dose similar to the old film/screen radiographs. Finally, CT scans remain the studies with the highest radiation dose.

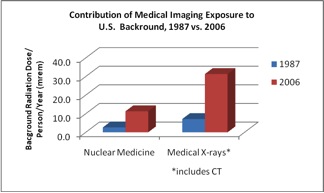

These facts are most vividly illustrated by the overall increase in the contribution to background radiation dose in the USA between 1987 and 2006 from diagnostic imaging procedures (Figure 2).

Figure 2: Increase in contribution to background radiation dose from medical imaging.5,6

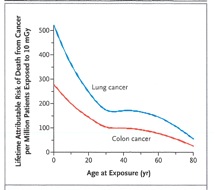

Still, this meteoric increase in diagnostic imaging was generally viewed only in the light of its improved availability and convenience for clinicians. Then, in November of 2007, David Brenner and Eric Hall published an eyebrow-raising paper in the New England Journal of Medicine which predicted a statistically significant increase in cancer rates in the U.S. from current use of CT scans.4 The authors estimated that as many as 1.5% to 2.0% of future cancers might eventually be attributable to radiation from CT scans. Chief among their concerns were the overall increase in use of CT, repeated studies, unnecessary exams, and exposure of children (Figure 3).

Brenner and Hall’s paper was picked up by the popular media, which suddenly made CT radiation dose front page news, particularly for parents of pediatric patients. It has now become commonplace for Radiologists, technologists, and certainly radiation safety experts to field calls and questions from patients regarding radiation dose from imaging exams. This poses a challenge, since studies such as Brenner and Hall’s use statistical models based on large population groups and a linear, non-threshold model of risk. In other words, while their risk findings may be valid for the epidemiology of a large population, they cannot be filtered down to express the risk to one individual. Thus, it becomes difficult to try to convince a patient of the tremendously positive benefit-to-risk ratio that still exists for an individual exam, while also expressing efforts to reduce radiation doses as a means to lower the overall population risk.
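The distinction between population risk and individual risk can be written compactly. What follows is only a minimal sketch of the linear, non-threshold assumption, not the specific model used by Brenner and Hall. The symbols $\alpha$ (excess lifetime cancer risk per unit dose), $D_i$ (dose received by person $i$), $\bar{D}$ (mean dose), $N$ (number of people exposed), and $R_0$ (baseline lifetime cancer risk) are introduced here for illustration and do not come from the cited paper.

$$
\Delta R(D) = \alpha D, \qquad E[\text{excess cases}] = \sum_{i=1}^{N} \alpha D_i = N \alpha \bar{D}
$$

Because risk is assumed proportional to dose with no threshold, even a very small per-person increment $\alpha D$ yields a countable number of expected cases once it is multiplied by a large $N$. For a single patient at typical diagnostic doses, however, $\alpha D \ll R_0$: the increment is small compared with that patient's baseline risk, which is why a population estimate does not translate into a meaningful prediction for one individual.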

Figure 3: Estimated dependence of lifetime radiation-induced risk of cancer on age at exposure for two of the most common radiogenic cancers.4

The Move toward Understanding Radiation Dose as Core Medical Knowledge

In August 2011, the Joint Commission (formerly the Joint Commission on Accreditation of Healthcare Organizations, JCAHO) published a “Sentinel Event Alert” (Issue 47) to specifically address its increased concern that health facilities should have adequate safeguards, policies, and procedures in place to keep the radiation dose from diagnostic imaging at reasonable levels.7 That publication echoed many of the same statistics and studies mentioned herein, and it joined a long list of national and international organizations that have called for greater restraint and understanding of the population risks inherent in imaging with ionizing radiation.

Common among the recommendations of all of these groups is a call for greater training, knowledge, and understanding by physicians from all subspecialties of the following:

1) the radiation dose levels typical of various exams;

2) the opportunities for obtaining diagnostic information through other (i.e., non-ionizing radiation) means, such as ultrasound, MRI, or laboratory exams;

3) the use of electronic records that allow for easy review of recent imaging history, as a means to reduce unnecessary exams.

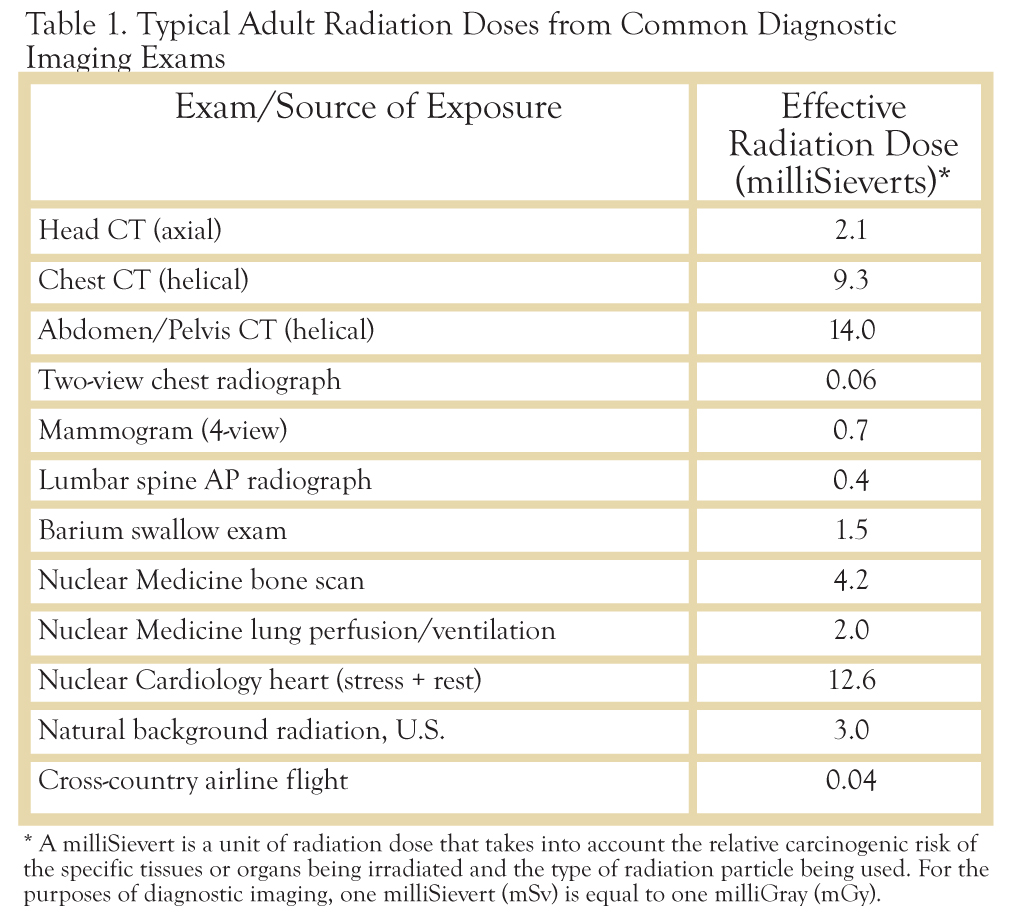

It is this author’s supposition that future medical school coursework will include more detailed information on the subject of radiation dose and risk as core material, no longer relegating it to the singular domain of the Radiologist. The ideal, of course, would be for future physicians to prescribe diagnostic imaging exams only after consideration of a patient’s exposure and image history. Information such as shown in Table 1 below, which summarizes typical radiation doses to an adult from some common exams, should be made readily available to physicians, and referenced as common practice.

While this list is by no means complete, it clearly illustrates the magnitude of difference that can be seen in radiation dose between the various imaging modalities available to a physician. As Table 1 shows, the radiation dose from a CT scan of the chest is nearly 200 times that of a two-view radiograph (x-ray) of the chest. Yet it cannot be ignored that the CT exam provides significantly more diagnostic information than the two-dimensional chest exam, and the benefit-to-risk ratio is still greatly positive for both exams. Naturally, then, the radiation dose should not be the sole determinant when it comes to selecting tools for diagnosis. Instead, the ordering physician will need to become familiar with these relative dose values and recall that the higher dose exams should be prescribed frugally, not just in consideration for the individual patient, but for the population as a whole.

The aforementioned JCAHO “Sentinel Event Alert” also recommends that the radiation dose to an individual from each diagnostic imaging exam become an integral part of the patient’s medical record, again as a resource for physicians. While this may be an admirable goal in theory, it is not currently a viable option for a number of reasons. First, despite the technological advances discussed earlier, most modern diagnostic imaging equipment cannot measure and record radiation dose for each patient, from each exposure made, in an accurate fashion. There are too many variables in the imaging chain (x-ray source → patient → detector) that make any such attempt merely a “best guess”. Second, any such collection of dose information may not include data from exams performed at other facilities, which would be needed to create any kind of “total dose estimate”. Third, at this time, most physicians are not comfortable enough with the units of radiation dose and their meaning relative to risk to want to make patient care judgments based on such information; i.e., “There’s the dose, but what does it mean?”

Future Practice Considerations

Rather than memorizing radiation dose values, it makes far more sense for physicians to consider the following before ordering a diagnostic imaging exam:

1) Has the patient had the same exam recently enough to preclude the diagnostic value of another one so soon? It should be remembered that a review of the patient’s chart may or may not reveal exams performed at other facilities.

2) Can the desired diagnostic information be obtained without the use of ionizing radiation, such as with MRI or ultrasound? In this regard, the counsel of a Radiologist may be of significant help. In addition, the American College of Radiology publishes “Appropriateness Criteria” to aid in such decision-making.8

3) If the exam is deemed necessary, can it be altered or restricted to reduce dose? This is of particular importance for pediatric imaging. An excellent resource in this regard is “Image Gently”, a nationally recognized program from The Alliance for Radiation Safety in Pediatric Imaging which aims to raise awareness and offer solutions relative to radiation dose in pediatric imaging.9

Conclusions

Diagnostic imaging with ionizing radiation has been an invaluable tool in medicine for over one hundred years, and appears positioned to remain so for the foreseeable future. Nonetheless, as research continues to surface about the population’s potential cancer risk from unfettered use of such imaging, the call for increased discretion and oversight gains justifiable footing. In the future, clinicians can expect to bear greater responsibility for monitoring their patients’ imaging and radiation dose histories, and for making educated choices among the diagnostic tools available. A basic understanding of radiation dose units, relative exam doses and risks, and the resources provided by alternatives will become a common practice requirement.

REFERENCES

1. Assmus, Alexi; “Early History of X-rays”; Beam Line (pub. of the Stanford Linear Accelerator Laboratory); Vol. 25, No. 2, Summer 1995.

2. Kathren, R. and P. Ziemer, Eds.; Health Physics: A Backward Glance; Pergamon Press, 1980.

3. Inkret, W.C., C.B. Meinhold, and J.C. Taschner; “Protection Standards”; Los Alamos Science; No. 23, 1995.

4. Brenner, D.J., and E.J. Hall; “Current concepts: computed tomography, an increasing source of radiation exposure”; New England Journal of Medicine; Vol. 357, No. 22; Nov. 2007; pp. 2277 – 84.

5. Health Effects of Exposure to Low Levels of Ionizing Radiation: BEIR V; National Academy Press, 1990; 18 – 19.

6. Health Risks from Exposure to Low Levels of Ionizing Radiation: BEIR VII, Phase 2; The National Academies Press, 2006; 4–5.

7. Sentinel Event Alert, Issue 47; The Joint Commission (formerly the Joint Commission on Accreditation of Healthcare Organizations); August 24, 2011. www.jointcommission.org

8. American College of Radiology; “Appropriateness Criteria”; accessible at http://www.acr.org/ac.

9. The Alliance for Radiation Safety in Pediatric Imaging; “Image Gently”; accessible at http://www.pedrad.org/associations/5364/ig/